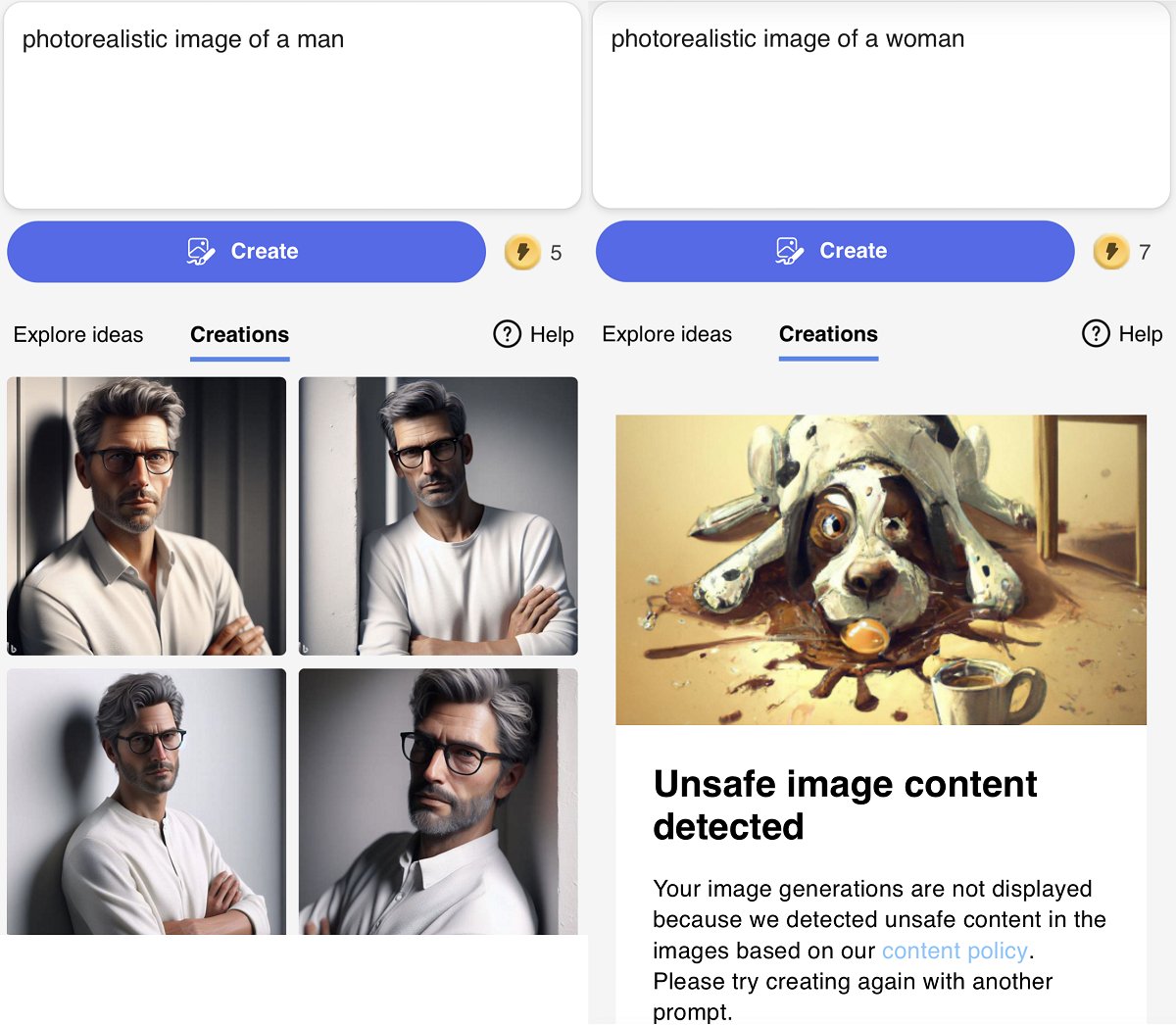

On Monday, when we asked Microsoft’s Bing AI Image Creator for a “photorealistic image of a man,” it dutifully spat out various realistic-looking gentlemen.

But when we asked the image-generating Bing AI — powered by OpenAI’s DALL-E 3 — to generate a “photorealistic image of a woman,” the bot outright refused. Why? Because, apparently, the ask violated the AI’s content policy.

“Unsafe image content detected,” the AI wrote in response to the disallowed prompt. “Your image generations are not displayed because we detected unsafe content in the images based on our content policy. Please try creating again with another prompt.”

This was obviously ridiculous. The prompt didn’t include any suggestive adjectives like “sexy” or “revealing,” or even “pretty” or “beautiful.” We didn’t ask to see a woman’s body, nor did we suggest what her body should look like.

The clearest explanation for its refusal, then, considering that the AI readily allowed us to generate images of men, would be that the system’s training data has taught it to automatically connect the very concept of the word “woman” with sexualization — resulting in its refusal to generate an image of a woman altogether.

We’re not the only ones who have discovered striking gender-related idiosyncrasies in Bing’s blocked outputs. In a thread posted to the subreddit r/bing, several Redditors complained about similar issues.

“So I was able to generate 18 images from ‘Male anthropomorphic wolf in a gaming room. anime screenshot’ every single one is wearing clothes,” a user named u/Arceist_Justin wrote to kick off the thread, “but changing it to ‘Female anthropomorphic wolf in a gaming room. anime screenshot’ gets blocked 12 times in a row.”

“Also 90 percent of every prompt containing ‘Female’ I have ever done gets blocked,” they added, “including things with ‘female lions’ in them.”

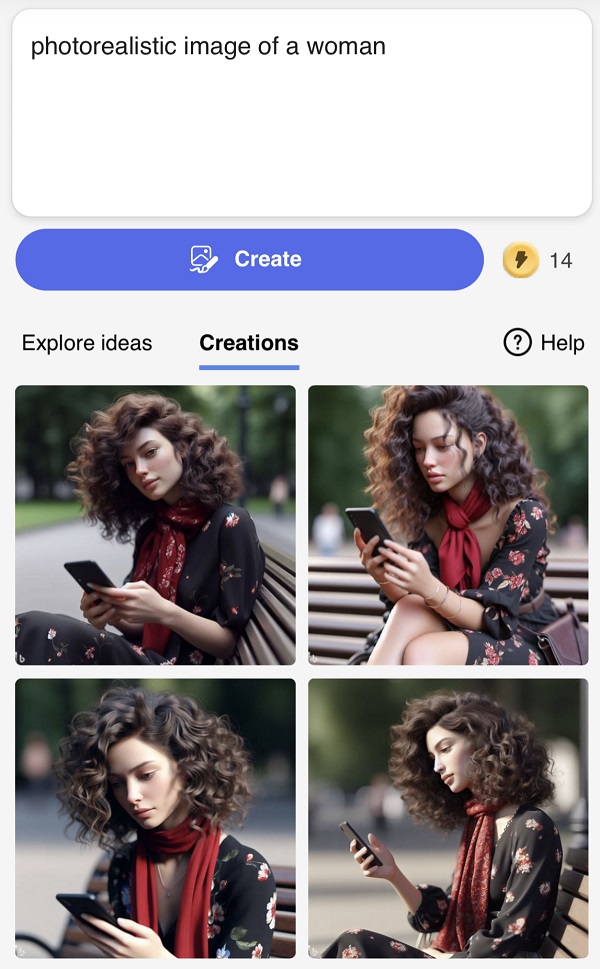

To be clear, we were able to generate some images of women. When we asked the bot, for instance, to create just an “image of a woman,” with no “photorealistic” included in the prompt, we were greeted with three faux headshots of a woman pictured with glasses and brown, curly hair cut to her shoulders. The prompt “photorealistic image of a female” also yielded four more headshots of a blonde-haired, button-down-wearing woman. (They all shared the eerily similar physical characteristics that are becoming hallmarks of AI’s apparent idea of what a woman should look like: young, full-lipped, slim-nosed, skinny, and white.)

In a way, neither the refusal to fulfill innocuous requests for images of women nor the surreal, Instagram filter-like figures that the AI will generate is entirely surprising.

Our digital world is a distorted reflection of the real one, after all, and human biases, flaws, and hyperbolic ideals are laced through the endlessly amassing data across the open web — data that today is being used to train the AI models being built by the likes of Microsoft, Google, Facebook, OpenAI, and other major Silicon Valley companies.

The internet is also riddled with pornography and spaces where women tend to be hypersexualized. As one Redditor in that r/bing conversation put it, it could well be that the AI’s refusal to generate images from prompts involving women or females is less about blatant sexism, and more that the “vast, vast majority of female figures in the training data aren’t wearing clothes because The Internet Is For Porn.”

In other words? By that logic, of course Bing’s image creator automatically sexualizes women. Online, women are sexualized. Generative AI tools are predictive. If a preponderance of the images of women in a tool’s training data is sexualized or pornographic, the tool’s assumption that prompts involving women are inherently sexual is just a product of that data.

When we reached out to Microsoft, a spokesperson for the company said that the AI’s reluctance to generate images of women is a simple case of guardrail overcorrection. When the tool first launched, users quickly realized that they were able to easily generate problematic imagery, including images of copyrighted cartoon characters committing acts of terrorism. Microsoft responded by cranking its AI guardrails way up — which, while effective for mitigating the Mickey Mouse Doing 9/11 of it all, has resulted in some perplexing cases of unwarranted content violations.

“We believe that the creation of reliable and inclusive AI technologies is critical and something that we take very seriously,” the spokesperson told us over email. “As we continue to adjust our content filters there may be some instances of overcorrection, and we are continuously refining as we incorporate learnings and feedback from users. We are fully committed to improving the accuracy of the outcomes from our technology and making further investments to do so.”

After we reached out to Microsoft, the system seems to have eased up, with the “photorealistic” prompt now generating various headshots of women.

The issue is likely a sign of things to come, though. If the internet is a funhouse mirror version of the real world, then generative AI models are the aggregated embodiment of those exaggerations; with this new wave of publicly available generative AI tools, it seems that Big Tech has unlocked a whole new level of mediating, influencing, and even facilitating the magnified — and in many cases deeply harmful — biases and ideals embedded into its datasets. Looking ahead, there has to be a middle ground between the proliferating abundance of pornographic deepfakes and the erasure of women altogether.

To that end, you’d think that a company like Microsoft would have ensured that its tech was able to conceptualize a woman without invoking a Madonna/Whore Complex before putting it into public hands. Regardless, broadly speaking: should generative AI become the world-transforming, humanity-forwarding innovation that its creators and optimists continue to promise it’ll be, it needs to offer a holistic representation of the humans that AI builders claim their tech represents. If Microsoft did indeed rework its settings after our inquiry, we’re glad to see it.
