You can only throw so much money at a problem.

This, more or less, is the line being taken by AI researchers in a recent survey. Asked whether “scaling up” current AI approaches could lead to artificial general intelligence (AGI), or a general-purpose AI that matches or surpasses human cognition, an overwhelming 76 percent of respondents said it was “unlikely” or “very unlikely” to succeed.

The survey, which polled 475 AI researchers and was conducted by scientists at the Association for the Advancement of Artificial Intelligence, was published in a new report. Its findings offer a resounding rebuff to the tech industry’s long-preferred method of achieving AI gains: furnishing generative models, and the data centers used to train and run them, with ever more hardware. Since AGI is the end game that AI developers all claim to be pursuing, it’s safe to say that researchers widely see scaling as a dead end.

“The vast investments in scaling, unaccompanied by any comparable efforts to understand what was going on, always seemed to me to be misplaced,” Stuart Russell, a computer scientist at UC Berkeley who helped organize the report, told New Scientist. “I think that, about a year ago, it started to become obvious to everyone that the benefits of scaling in the conventional sense had plateaued.”

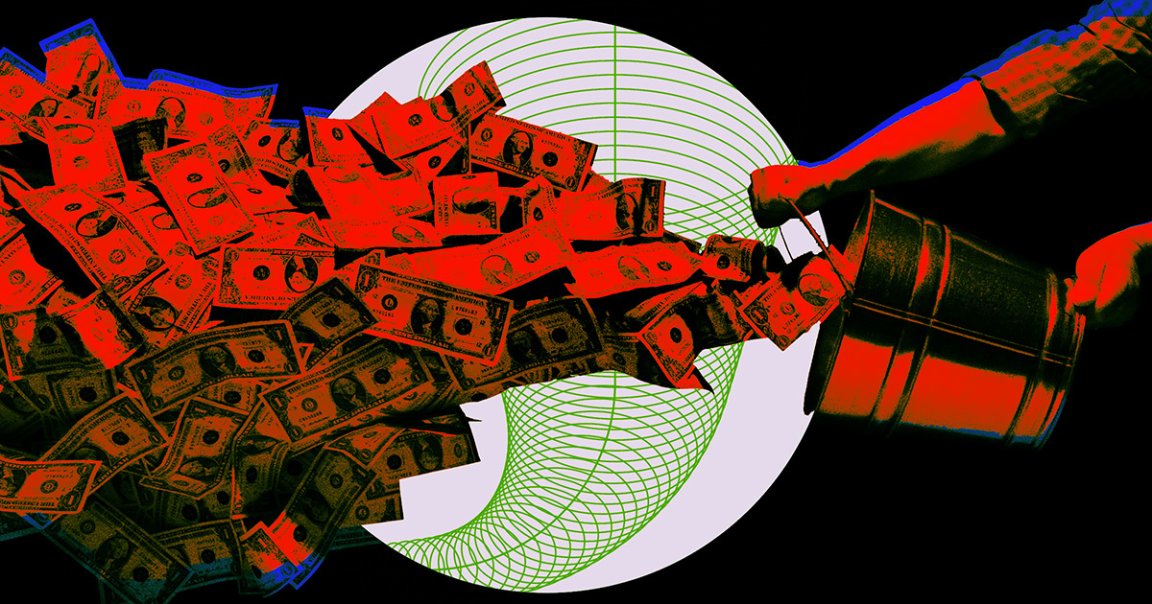

Colossal sums of money are being thrown around in the AI arms race. Venture capital funding for generative AI alone topped $56 billion in 2024, TechCrunch reported. Much of that is being spent to build or run the massive data centers that generative models require. Microsoft, for example, has committed to spending $80 billion on AI infrastructure in 2025.

It follows that the energy demand is just as staggering. Microsoft signed a deal to fire up an entire nuclear power plant just to power its data centers, with its rivals Google and Amazon also penning splashy nuclear energy deals.

The premise that AI could be improved indefinitely by scaling was always on shaky ground. Case in point: the tech sector’s recent existential crisis, set off by the Chinese startup DeepSeek, whose AI model could go toe-to-toe with the West’s flagship, multibillion-dollar chatbots at a purported fraction of the training cost and power.

Of course, the writing had been on the wall before that. In November last year, reports indicated that OpenAI researchers had found that the upcoming version of the company’s GPT large language model showed significantly less improvement over its predecessor than previous versions had shown over theirs, and in some cases no improvement at all.

In December, Google CEO Sundar Pichai went on the record as saying that easy AI gains were “over” — but confidently asserted that there was no reason the industry couldn’t “just keep scaling up.”

Cheaper, more efficient approaches are being explored. OpenAI has used a method known as test-time compute with its latest models, in which the AI spends more time “thinking” before selecting the most promising solution. Researchers claimed this achieved a performance boost that would otherwise have taken mountains of scaling to replicate.

But this approach is “unlikely to be a silver bullet,” Arvind Narayanan, a computer scientist at Princeton University, told New Scientist.
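To make test-time compute a little more concrete, here is a minimal Python sketch of one of its simplest forms, best-of-N sampling: draw several candidate answers, then let a verifier keep the highest-scoring one. OpenAI hasn’t fully disclosed how its models spend their extra “thinking” time, so treat this as an illustration of the general principle, not the company’s method. The proposer, the verifier, and the square-root “problem” are all hypothetical stand-ins.

```python
import random


def propose_answer(rng: random.Random) -> int:
    """Hypothetical stand-in for a model's sampled answer: a random guess in 1..100."""
    return rng.randint(1, 100)


def verifier_score(candidate: int, target: int) -> int:
    """Hypothetical verifier: measures how close candidate**2 comes to target.

    Higher is better; 0 means the candidate is an exact square root of target.
    """
    return -abs(candidate * candidate - target)


def best_of_n(target: int, n: int, seed: int = 0) -> int:
    """Spend more inference-time compute: sample n answers, keep the best-scored one."""
    rng = random.Random(seed)
    candidates = [propose_answer(rng) for _ in range(n)]
    return max(candidates, key=lambda c: verifier_score(c, target))


if __name__ == "__main__":
    target = 37 * 37  # the toy "problem": find the square root of 1369
    for n in (1, 10, 100):
        answer = best_of_n(target, n, seed=1)
        print(f"n={n:>3}: answer={answer}, score={verifier_score(answer, target)}")
```

Because a run with the same seed always draws the same first candidates, a larger `n` can only match or beat a smaller one. That is the bet behind the approach: buy a better answer with more compute at inference time rather than a bigger model.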

DeepSeek, meanwhile, leaned heavily on an approach dubbed “mixture of experts,” which divides a model into many smaller sub-networks, the proverbial “experts,” and activates only a handful of them for any given input, instead of running a single “generalist” network in full every time.
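The routing trick at the heart of mixture of experts fits in a few dozen lines. The Python sketch below is a toy, not DeepSeek’s architecture: the four “experts” are hypothetical one-line functions rather than real neural networks, and the router weights are made up. What it does show is the core mechanism: a small router scores every expert, only the top-k (here, two) are actually run, and their outputs are blended using softmax weights over the selected scores.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Sequence[float]], Vector]


def make_expert(scale: float) -> Expert:
    """Hypothetical 'expert': a stand-in for a sub-network, here just elementwise scaling."""
    def expert(x: Sequence[float]) -> Vector:
        return [scale * v for v in x]
    return expert


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: Sequence[float],
    experts: Sequence[Expert],
    router_weights: Sequence[Sequence[float]],
    k: int = 2,
) -> Tuple[Vector, List[int]]:
    """Route x to the top-k experts and return (blended output, indices of experts run)."""
    # Router: one score per expert, the dot product of x with that expert's router vector.
    logits = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in router_weights]
    # Keep only the k highest-scoring experts; the others are never executed.
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    gates = softmax([logits[i] for i in chosen])
    # Weighted sum of the chosen experts' outputs.
    out = [0.0] * len(x)
    for gate, i in zip(gates, chosen):
        y = experts[i](x)
        out = [o + gate * v for o, v in zip(out, y)]
    return out, chosen


if __name__ == "__main__":
    experts = [make_expert(s) for s in (0.5, 1.0, 2.0, 3.0)]
    # One hypothetical router vector per expert, the same length as the input.
    router_weights = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [0.5, 0.5, 0.5]]
    output, chosen = moe_forward([0.2, -0.1, 0.9], experts, router_weights, k=2)
    print("experts run:", chosen, "of", len(experts))
    print("output:", output)
```

In production models this routing typically happens separately for each token inside each layer, and real systems, DeepSeek’s included, add considerably more machinery, such as schemes to spread the load evenly across experts. The payoff is the one DeepSeek was chasing: a model can hold many experts’ worth of parameters while paying to run only a few of them per input.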

Nonetheless, if Microsoft’s commitment to spending tens of billions of dollars on data centers is any indication, brute-force scaling will remain the favored MO for the titans of the industry, leaving the scrappier startups to scrounge for ways to do more with less.
