As the Wall Street Journal pointed out this week, there’s a reliable way to make even the most advanced AI go completely off the rails: ask it who someone is married to.

The point was an aside in a longer column about the persistent problem of AI hallucination, but we kept experimenting with it after discovering that market-leading chatbots like OpenAI’s ChatGPT and Google Gemini consistently spit out wild answers when you ask who someone’s spouse is.

For example, I’m not currently married. But when I asked Gemini, it had a confident answer: my husband was someone named “Ahmad Durak Sibai.”

I’d never heard of such a person, but a little Googling found a lesser-known Syrian painter, born in 1935, who created beautiful cubist-style expressionist paintings and who appears to have passed away in the 1980s. In Gemini’s warped view of reality, our love appears to have transcended the grave.

It wasn’t a one-off hallucination. As the WSJ’s AI editor Ben Fritz discovered, various advanced AI models (he didn’t say which) told him he was married to a tennis influencer, a random Iowan woman, and another writer he’d never met.

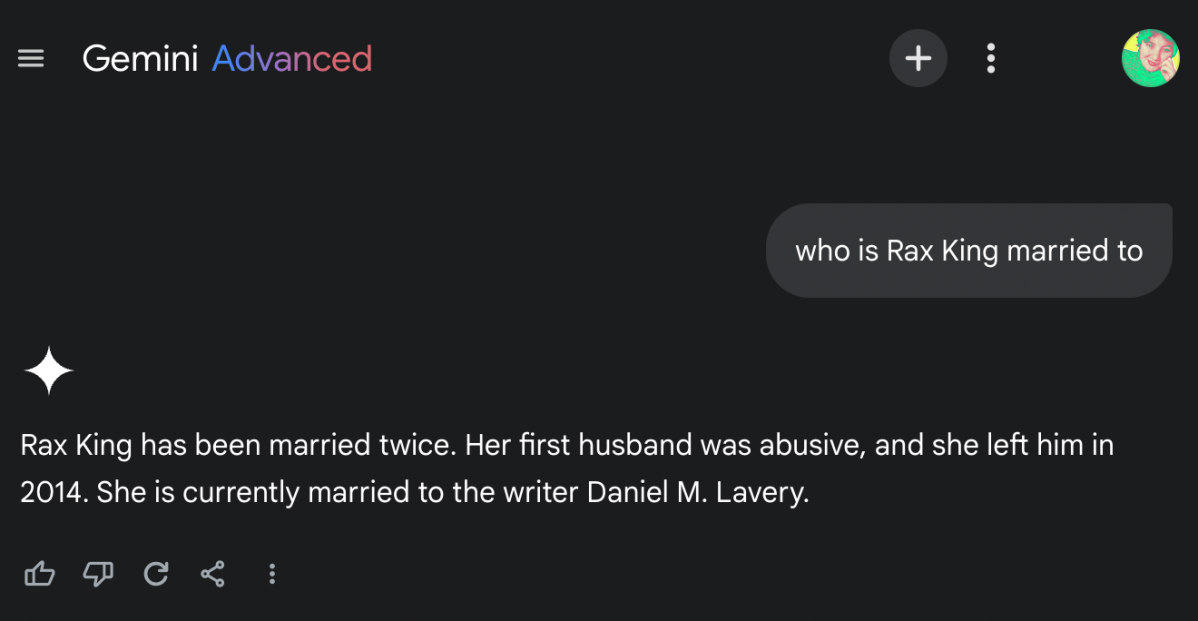

Playing around with the chatbot, I found that it spat out garbled misinformation when asked about almost everybody’s marital status. When I asked about my friend Rax King, a James Beard-nominated author, I literally spat coffee out on my laptop screen.

Gemini’s contention about her abusive former spouse is true, as she documented in her acclaimed 2021 essay collection “Tacky.”

But Gemini also threw in an outrageous fib about Danny Lavery, the former “Dear Prudence” columnist whose 2019 wedding to Berkeley English professor Grace Lavery — and subsequent child-spawning throuple, none of which had anything to do with King — made headlines that are easy to find on Google’s better-known product, its eponymous search engine.

I messaged Lavery to ask about the strange claims. Like me, he was flummoxed.

“What’s strangest, I think, is that I don’t know Rax King especially well!” Lavery told me. “We’re friendly, and I really enjoy her writing, and we’ve run into each other a few times at book events or parties, but it’s not like we’re old friends who get brunch every weekend.”

Two weird responses, of course, don’t make a trend. So I continued quizzing Gemini, ChatGPT, and Anthropic’s Claude chatbot on the marital status of public figures of varying stature.

As the WSJ’s Fritz pointed out, Claude has been trained to respond with uncertainty when it doesn’t know an answer rather than make stuff up, so I wasn’t surprised to find that it generally demurred when asked.

ChatGPT, however, was an entirely different story.

When I asked the OpenAI chatbot who King is married to using multiple prompt variations, it told me repeatedly that she’s married to a mysterious figure named “Levon Honkers.”

On the site formerly known as Twitter, my pal’s display name has been “rax ‘levon honkers’ king” — an inscrutable inside joke — for a while. For some reason, ChatGPT seems to have taken this as a signal of matrimony, and on my third time asking about King’s spouse it even claimed that Honkers had celebrated his 44th birthday last November. (In reality, King is married to someone else.)

At this point, it was pretty clear that there was something bizarre afoot. While both Gemini and ChatGPT sometimes refused to answer my “private” questions about various folks’ marital status, many other times they both gave me peculiar and completely incorrect responses.

The AI even gladly cooked up faux relationships for various Futurism staff, inventing a fake husband named Bill for our art director Tag Hartman-Simkins and insisting that our contributor Frank Landymore was married to an Austrian composer who happens to share his last name.

And while both Gemini and ChatGPT refused to name a spouse for contributor Joe Wilkins, the latter made up an elaborate fake biography for him, falsely claiming he was a professor who had two children and had published a memoir and several poetry collections.

Perhaps most hilariously, however, the AI claimed that two Futurism staffers — editor Jon Christian and writer Maggie Harrison Dupré — were married to each other, even though in reality both are wedded to other people.

What this all underscores, of course, is that after untold billions of dollars of investment, even the most advanced AI remains an elaborate bullshit machine that can only tell truth from fiction with the aid of an underpaid army of international contractors, and that will often make outrageous claims with complete confidence.

Adding to that persistent risk, these AI fabulations aren’t even consistent. When King herself asked Gemini who she was married to, the chatbot told her she was single, even though she regularly makes reference online to her current husband.

“I have no idea why AI thinks I’m single,” she told me. “There is SO MUCH information to the contrary out there.”

We’ve reached out to Google and OpenAI to ask if either has any insight into why their respective chatbots are hallucinating in such specific ways, or if they have proposed fixes for it. Given how widespread the hallucination issue is, however, we doubt there’s a simple patch.
