Two Tesla influencers were riding in a brand-new Model Y “Juniper,” a refreshed version of the automaker’s most popular car. Sitting in the driver’s seat was the content creator “Bearded Tesla Guy,” who’d just begun a coast-to-coast road trip with his friend to put the vehicle’s “Full Self-Driving” tech to a continent-spanning test.

They barely made it 60 miles.

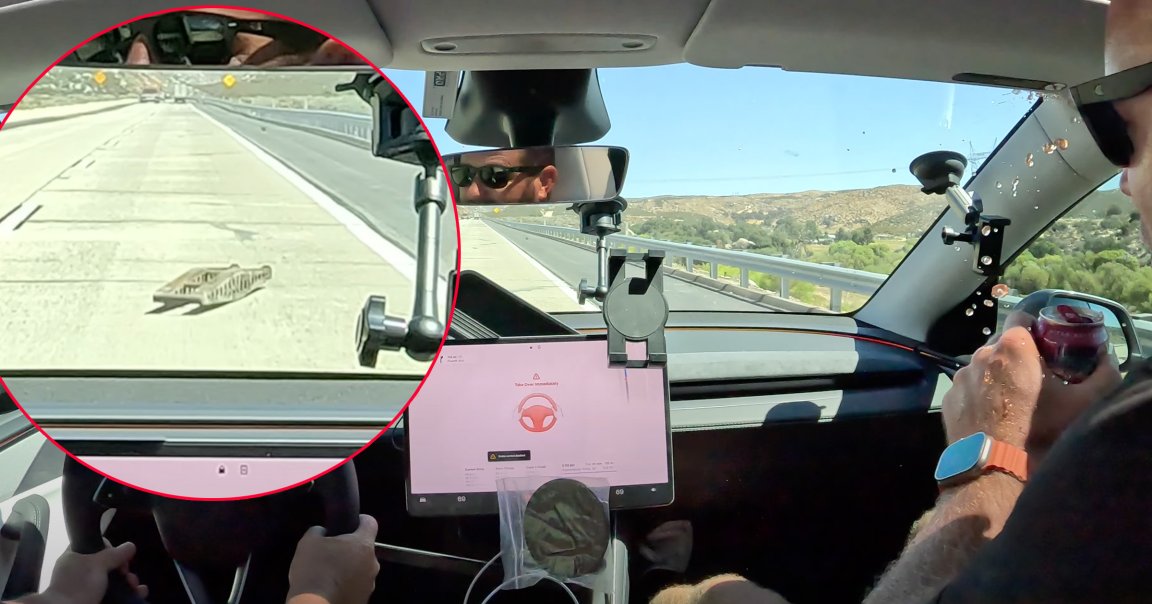

In a video shared by Bearded Tesla and spotted by Electrek, a small obstacle is clearly visible on the stretch of freeway ahead. At first, the passenger remarks it “looks like roadkill.” But as they speed toward the object, it becomes obvious that it’s something human-made and sturdy.

The Full Self-Driving software doesn’t seem to take notice, though. The Tesla barrels over the object and bounces dramatically off the road. After considerable airtime, it makes a hard landing, and the driver finally intervenes by forcing the car to pull over.

They hadn’t even left California, and the car had already crashed.

“This is not how I anticipated it starting,” Bearded Tesla can be heard saying in the video.

The object, it turned out, was some kind of metal girder that likely fell off the back of a truck. No one was hurt, but the vehicle took a beating. According to Electrek, the road-tripping pair confirmed in a follow-up video that the Model Y had a broken sway bar bracket and damaged suspension.

It’s yet another incident that should raise serious questions about the capabilities of Tesla’s self-driving software, which CEO Elon Musk has repeatedly insisted can drive by itself. Making this crash particularly ironic, Musk said nearly a decade ago that by 2018, Teslas would be able to drive themselves across the entire country without even a driver inside to supervise them.

Despite what its name implies, the driving mode still requires constant human supervision and isn’t considered autonomous. The California DMV sued Tesla over this spurious naming scheme a few years back, accusing the automaker of false advertising. Tesla has since officially renamed FSD “Full Self-Driving (Supervised),” but that’s a ludicrous oxymoron, and the full name is rarely spoken anyway, which lets the company keep fostering the impression that its cars are as capable as human drivers even when the fine print clearly states otherwise.

Tesla cars running Full Self-Driving or its no-less-misleading cousin Autopilot have been involved in hundreds of accidents, some of them deadly. This one in particular brings to mind Musk’s stubborn refusal to use lidar sensors, which he once called a “crutch,” to help his cars see. Instead, he’s embraced a “vision only” approach that relies solely on the car’s cameras to detect its surroundings, something critics say could be the automaker’s undoing.

Yes, the driver should’ve intervened here. But the tech also should’ve recognized a pretty large obstacle on the road ahead. It made no attempt to steer around the object or even apply the brakes. Maybe if it had other sensors that don’t get blinded by sunlight, it would’ve.

“I’m speechless on what just happened,” the cameraman said. “Absolutely speechless.”
