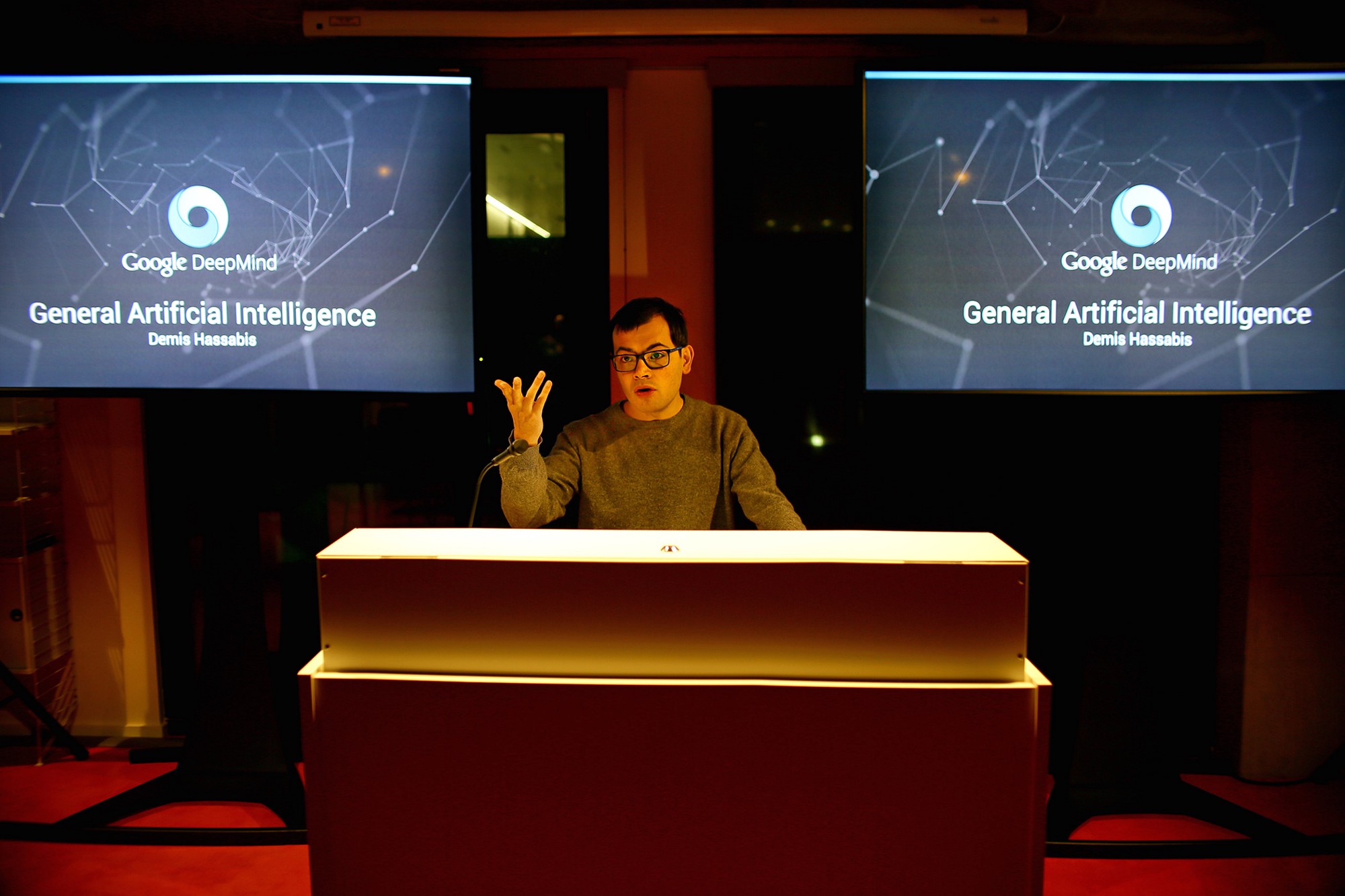

In the race to recruit the best AI talent, Google scored a coup by getting the team led by a former video game guru and chess prodigy

From the day in 2011 that Demis Hassabis co-founded DeepMind, with funding from the likes of Elon Musk, the UK-based artificial intelligence startup became the most coveted target of major tech companies. In June 2014, Hassabis and his co-founders, Shane Legg and Mustafa Suleyman, agreed to Google’s purchase offer of $400 million. Late last year, Hassabis sat down with Backchannel to discuss why his team went with Google, and why DeepMind is uniquely poised to push the frontiers of AI. The interview has been edited for length and clarity.

[Steven Levy] Google is an AI company, right? Is that what attracted you to Google?

[Hassabis] Yes, right. It’s a core part of what Google is. When I first started here I thought about Google’s mission statement, which is to organize the world’s information and make it universally accessible and useful. And one way I interpret that is to think about empowering people through knowledge. If you rephrase it like that, the kind of AI we work on fits very naturally. The artificial general intelligence we work on here automatically converts unstructured information into useful, actionable knowledge.

Were your interactions with Larry Page a big factor in your decision to sell to Google?

Yes, a really big factor. Larry specifically and other people were genuinely interested in AI as a cool thing. Many big companies realize the power of AI now and want to do some AI, but I don’t think they’re as passionate about it as we are or Google is.

So even though Facebook may have super intelligent leadership, Mark [Zuckerberg] might see AI as more of a tool than a mission in a larger sense?

Right, yes. That may change over time. I certainly believe AI is one of the most important things humanity can work on, but he hasn’t got the deep-rooted interest in it that someone like Larry has. He’s interested in other things: connecting people is his mission. And he’s interested in very cool things like Oculus and stuff like that. I used to do computer games and graphics and that stuff, but it’s not as important to me as AI.

How big of a boost is it to use Google’s infrastructure?

It’s huge. That’s another big reason we teamed up with Google. We had tons of venture money and amazing backers, but building the kind of computer and engineering infrastructure that Google has would have taken a decade. Now we can do our research much more quickly because we can run a million experiments in parallel.

The big leap you are making is not only to dig into things like structured databases but to analyze unstructured information – such as documents or images on the Internet – and be able to make use of them as well, right?

Exactly. That’s where the big gains are going to be in the next few years. I also think the only path to developing really powerful AI would be to use this unstructured information. It’s also called unsupervised learning: you just give it data and it learns by itself what to do with it, what the structure is, what the insights are. We are only interested in that kind of AI.
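Unsupervised learning, as described here, means finding structure in data that carries no labels. The interview does not say which unsupervised methods DeepMind uses, so the following is only a generic illustration: k-means clustering (Lloyd’s algorithm), a classic textbook technique, applied to a handful of hypothetical 1-D points. The function name `kmeans` and the data are assumptions chosen for illustration.

```python
import random

def kmeans(points, k, iters=20, seed=0):
    """Group unlabeled 1-D points into k clusters with Lloyd's algorithm (illustrative only)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct data points
    for _ in range(iters):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its members
        # (an empty cluster keeps its old centroid).
        centroids = [sum(c) / len(c) if c else centroids[i]
                     for i, c in enumerate(clusters)]
    return sorted(centroids)

# Hypothetical unlabeled data with two obvious groups.
data = [1.0, 1.2, 0.8, 9.9, 10.1, 10.0]
print(kmeans(data, k=2))
```

No labels are supplied anywhere; the two group centers emerge from the data alone, which is the sense of “it learns by itself what the structure is.”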

One of the people you work with at Google is Geoff Hinton, a pioneer of neural networks. Has his work been crucial to yours?

Sure. He had this big paper in 2006 that rejuvenated this whole area. And he introduced this idea of deep neural networks, or deep learning. The other big thing that we have here is reinforcement learning, which we think is equally important. A lot of what DeepMind has done so far is combining those two promising areas of research together in a really fundamental way. And that’s resulted in the Atari game player, which really is the first demonstration of an agent that goes from pixels to action, as we call it.
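To make “pixels to action” a little more concrete: DeepMind’s published Atari agent (DQN) paired a deep network with Q-learning, a reinforcement-learning method that learns an estimate of the long-term reward of each action in each state. The sketch below shows only the reinforcement-learning half, under loud assumptions: a lookup table stands in for the deep network, and a hypothetical five-state corridor stands in for an Atari screen. The environment, function name and hyperparameters are all illustrative, not DeepMind’s code.

```python
import random
from collections import defaultdict

ACTIONS = (-1, +1)  # step left, step right

def q_learning(n_states=5, episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning on a toy corridor: start in state 0, reward 1.0 on reaching the last state."""
    rng = random.Random(seed)
    q = defaultdict(float)  # q[(state, action)] -> estimated discounted return
    goal = n_states - 1
    for _ in range(episodes):
        s = 0
        while s != goal:
            if rng.random() < epsilon:
                a = rng.choice(ACTIONS)  # explore
            else:
                best = max(q[(s, b)] for b in ACTIONS)
                # Exploit current estimates, breaking ties at random.
                a = rng.choice([b for b in ACTIONS if q[(s, b)] == best])
            s_next = max(s + a, 0)  # wall at the left end
            r = 1.0 if s_next == goal else 0.0
            # Q-learning target: reward plus the discounted value of the best
            # next action (zero once the episode ends at the goal).
            future = 0.0 if s_next == goal else max(q[(s_next, b)] for b in ACTIONS)
            q[(s, a)] += alpha * (r + gamma * future - q[(s, a)])
            s = s_next
    return q

q = q_learning()
# Greedy policy read off the learned table for every non-goal state.
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(4)}
print(policy)
```

After training, acting greedily on the table should step right toward the reward from every state. In the Atari setting the table is replaced by a network that maps raw screen pixels to one value per joystick action.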

What was different about your approach to research here?

We called the company DeepMind, obviously, because of the bet on deep learning. But we were also deeply interested in getting insights from neuroscience.

I imagine that the more we learn about the brain, the better we can create a machine approach to intelligence.

Yes. The exciting thing about these learning algorithms is they are kind of meta level. We’re imbuing it with the ability to learn for itself from experience, just like a human would do, and therefore it can do other stuff that maybe we don’t know how to program. It’s exciting to see that when it comes up with a new strategy in an Atari game that the programmers didn’t know about. Of course you need amazing programmers and researchers, like the ones we have here, to actually build the brain-like architecture that can do the learning.

In other words, we need massive human intelligence to build these systems but then we’ll –

… build the systems to master the more pedestrian or narrow tasks like playing chess. We won’t program a Go program. We’ll have a program that can play chess and Go and Crosses and Drafts and any of these board games, rather than reprogramming every time. That’s going to save an incredible amount of time. Also, we’re interested in algorithms that can use their learning from one domain and apply that knowledge to a new domain. As humans, if I show you some new board game or some new task or new card game, you don’t start from zero. If you know how to play bridge and whist and whatever, I could invent a new card game for you, and you wouldn’t be starting from scratch; you would be bringing to bear this idea of suits and the knowledge that a higher card beats a lower card. This is all transferable information no matter what the card game is.

Would each program be limited – like one that plays lots of card games – or are you thinking of one massive system that learns how to do everything?

Eventually something more general. The idea for our research program is to slowly widen and widen those domains. We have a prototype of this – the human brain. We can tie our shoelaces, we can ride bicycles and we can do physics with the same architecture. So we know this is possible.

Tell me about the two companies, both out of Oxford University, that you just bought.

These Oxford guys are amazingly talented groups of professors. One team [formerly Dark Blue Labs] will focus on natural language understanding, using deep neural networks to do that. So rather than the old kind of logic techniques for NLP, we’re using deep networks and word embeddings and so on. That’s led by Phil Blunsom. We’re interested in eventually having language embedded into our systems so we can actually converse. At the moment they are obviously prelinguistic; there is no language capability in there. So we’ll see all of those things marrying up. And the second group, Vision Factory, is led by Andrew Zisserman, a world-famous computer vision guy.

But all of this research would eventually be part of the same engine.

Yeah. Eventually all of those things become part of one bigger system.

What products at Google is your team looking to improve?

We still feel quite new to Google, but there’s tons of things we could apply parts of our technology to. We’re looking at various aspects of search. We’re looking at stuff like YouTube recommendations. We’re thinking about making Google Now better in terms of how well it understands you as an assistant and actually understands more about what you’re trying to do. We’re looking at self-driving cars and maybe helping out with that.

When will we see this happening?

In six months to a year’s time we’ll start seeing some aspects of what we’re doing embedded in Google Plus, natural language and maybe some recommendation systems.

How about video search?

That’s another big thing: do you want to type in actions like someone kicking a ball or smoking or something like that? The Vision group is working on those kinds of questions. Action recognition, not just image recognition.

What do you hope to do for Google in the long run?

I’m really excited about the potential for general AI. Things like AI-assisted science. In science, almost all the areas we would like to make more advances in (disease, climate, energy, you could even include macroeconomics) are all questions of massive information, almost ridiculous amounts. How can human scientists navigate and find the insights in all of that data? It’s very hard not just for a single scientist, but even for a team of very smart scientists. We’re going to need machine learning and artificial intelligence to help us find insights and breakthroughs in those areas, so we actually really understand what these incredibly complex systems are doing. I hope we will be linking into various efforts at Google that are looking at these things, like Calico or Life Sciences.

What did you think of the movie Her?

I loved it aesthetically. It’s in some ways a positive take on what AI might become and it had interesting ideas about emotions and other things in computers. I do think it’s sort of unrealistic, in that there was this very powerful AI out there but it was stuck on your phone and just doing fairly everyday things. Whereas it should have been revolutionizing science and… there wasn’t any evidence of anything else going on in the world that was very different, right?

You’ve had successful experiments, but how difficult is it to build those into a system that hundreds of millions of people will use?

It’s a multi-step process. You start with the research question and find that answer. Then we do some major neuroscience and then we look at it in machine learning and we implement a practical system that can play Atari really well and then that’s ready to scale. Here at DeepMind about three quarters of the team is research but one quarter is applied. That applied team is the interface between the research that gets done here and the rest of Google’s products.

You had a fantastic career in the gaming world and you left it because you felt you had to learn about the brain.

Yeah. Actually my whole career, including my games career, has been leading up to the AI company. Even in my early teens I decided that AI was going to be the most interesting and the most important thing to work on.

But you were at the top of the game world – you worked on huge hits like Black and White and founded Elixir Studios – and you just thought, “OK, time to study neuroscience?” Why?

It was more like, “Let’s see how far I can push AI under the guise of games.” So Black & White was probably the pinnacle of that, then it was Theme Park and Republic and these other things that we tried to write. And then around 2004-2005, I felt we’d pushed AI as far as it could go within the constraints of the very tight commercial environment of games. And I could see that games were going to go more towards simpler games and mobile, as they have done, and so actually there would be less chance to work on a big AI project within a game project. So then I started thinking about DeepMind – this is 2004 – but I realized that we still didn’t have enough of the components to make fast progress. Deep learning hadn’t appeared at that point. Computers weren’t powerful enough. So I looked at which field I should do my PhD in and thought it would be better to do it in neuroscience than in AI, because I wanted to learn about a whole new set of ideas and I already knew world-class AI people.

In your years of studying the brain, what was the biggest takeaway as you started an AI company?

Lots of things. One is reinforcement learning. Why do we believe that that’s an important core component? One thing we do here is look to neuroscience for inspiration for new algorithms and also for validation of existing algorithms. Well, it turns out that in the late ’90s, Peter Dayan and colleagues were involved in an experiment using monkeys, which showed that their neurons were really doing reinforcement learning when they were learning about things. Therefore it’s not crazy to think that that could be a component of an overall AI system. When you’re in the dark moments of trying to get something working, it’s useful to have that additional information, to say, “We’re not mad, this will really work, we know this works; we just need to try harder.” And the other thing is the hippocampus. That’s the brain area I studied, and it’s the most fascinating.

Deep learning is essentially about [mimicking the] cortex. But the hippocampus is another critical part of the brain and it’s built very differently; it’s a much older structure. If you knock it out, you don’t have memories. So I was fascinated by how this all works together. There’s consolidation [between the cortex and the hippocampus] at times like when you’re sleeping. Memories you’ve recorded during the day get replayed orders of magnitude faster back to the rest of the brain. We used this idea of memory replay in our Atari agent. We replayed trajectories of experiences that the agent had had during the training phase, and it got the chance to see them hundreds and hundreds of times again, so it could get really good at that particular bit.

When you talk about the algorithms of the brain, is that strictly in the metaphoric sense or are you talking about something more literal?

It’s more literal. But we’re not going to build specifically an artificial hippocampus. You want to say, what are the principles of that? [We’re ultimately interested in the] functionality of intelligence, not specifically the exact details of the specific prototype that we have. But it’s also a mistake to ignore the brain, which a lot of machine learning people do. There are hugely important insights and general principles that you can use in your algorithms.

Because we don’t fully understand the brain, it seems difficult to take this approach all the way. Do you think there’s something that’s “wet” that you can’t do in silicon?

I looked at this very carefully for a while during my PhD and before that, just to check where this line should be drawn. [Roger] Penrose has quantum consciousness [which postulates there are quantum effects in the mind that computers can’t emulate]. Beautiful story, right? You wish it were sort of true, right? But it all collapses. There doesn’t seem to be any evidence. Very top biologists have looked carefully for quantum effects in the brain and there just didn’t seem to be any. As far as we know it’s just a classical computation device.
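The memory replay Hassabis describes for the Atari agent, where past experiences are stored and replayed many times during training, is commonly implemented as a replay buffer: recent transitions go into a fixed-size memory, and learning draws random minibatches from it. The sketch below assumes uniform random sampling; the class name, capacity, batch size and dummy transitions are hypothetical, not DeepMind’s code.

```python
import random
from collections import deque, namedtuple

# One stored step of experience: what the agent saw, did, received, and saw next.
Transition = namedtuple("Transition", "state action reward next_state done")

class ReplayBuffer:
    """Fixed-size memory of past transitions, sampled at random for training (illustrative)."""

    def __init__(self, capacity, seed=0):
        self.memory = deque(maxlen=capacity)  # oldest experiences drop off automatically
        self.rng = random.Random(seed)

    def push(self, *args):
        self.memory.append(Transition(*args))

    def sample(self, batch_size):
        # Uniform minibatch without replacement: each stored experience can be
        # replayed many times across training, and consecutive, highly
        # correlated steps are split up.
        return self.rng.sample(list(self.memory), batch_size)

    def __len__(self):
        return len(self.memory)

buf = ReplayBuffer(capacity=1000)
for t in range(5000):
    buf.push(t, t % 2, 0.0, t + 1, False)  # hypothetical dummy transitions
batch = buf.sample(32)
print(len(buf), len(batch))
```

Because the deque has a maximum length, only the most recent 1,000 of the 5,000 dummy transitions survive, and every sampled batch is drawn from that window.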

What’s the big problem you’re working on now?

The big thing is what we call transfer learning. You’ve mastered one domain of things, how do you abstract that into something that’s almost like a library of knowledge that you can now usefully apply in a new domain? That’s the key to general knowledge. At the moment, we are good at processing perceptual information and then picking an action based on that. But when it goes to the next level, the concept level, nobody has been able to do that.

So how do you go about doing that?

We have several promising projects on that which we’re not ready to announce yet.

One condition you set on the Google purchase was that the company set up some sort of AI ethics board. What was that about?

It was a part of the agreement of the acquisition. It’s an independent advisory committee like they have in other areas.

Why did you do that?

I think AI could be world changing, it’s an amazing technology. All technologies are inherently neutral but they can be used for good or bad so we have to make sure that it’s used responsibly. I and my cofounders have felt this for a long time. Another attraction about Google was that they felt as strongly about those things, too.

What has this group done?

Certainly there is nothing yet. The group is just being formed – I wanted it in place way ahead of the time that anything came up that would be an issue. One constraint we do have, which wasn’t part of the committee but part of the acquisition terms, is that no technology coming out of DeepMind will be used for military or intelligence purposes.

Do you feel like a committee really could make an impact on controlling a technology once you bring it into the world?

I think if they are sufficiently educated, yes. That’s why they’re forming now, so they have enough time to really understand the technical details and the nuances of this. There are some top professors in computation, neuroscience and machine learning on this committee.

And the committee is in place now?

It’s formed, yes, but I can’t tell you who is on it.

Why not?

Well, because it’s confidential. We think it’s important [that it stay out of public view], especially during this initial ramp-up phase where there is no tech... I mean, we’re working on computing Pong, right? There are no issues here currently, but in the next five or ten years maybe there will be. So really it’s just getting ahead of the game.

Will you eventually release the names?

Potentially. That’s something also to be discussed.

Transparency is important in this too.

Sure, sure. There are lots of interesting questions that have to be answered on a technical level about what these systems are capable of, what they might be able to do, and how we are going to control them. At the end of the day they need goals set by the human programmers. Our research team here works on those theoretical aspects partly because we want to advance [the science], but also to make sure that these things are controllable and that there are always humans in the loop and so on.
