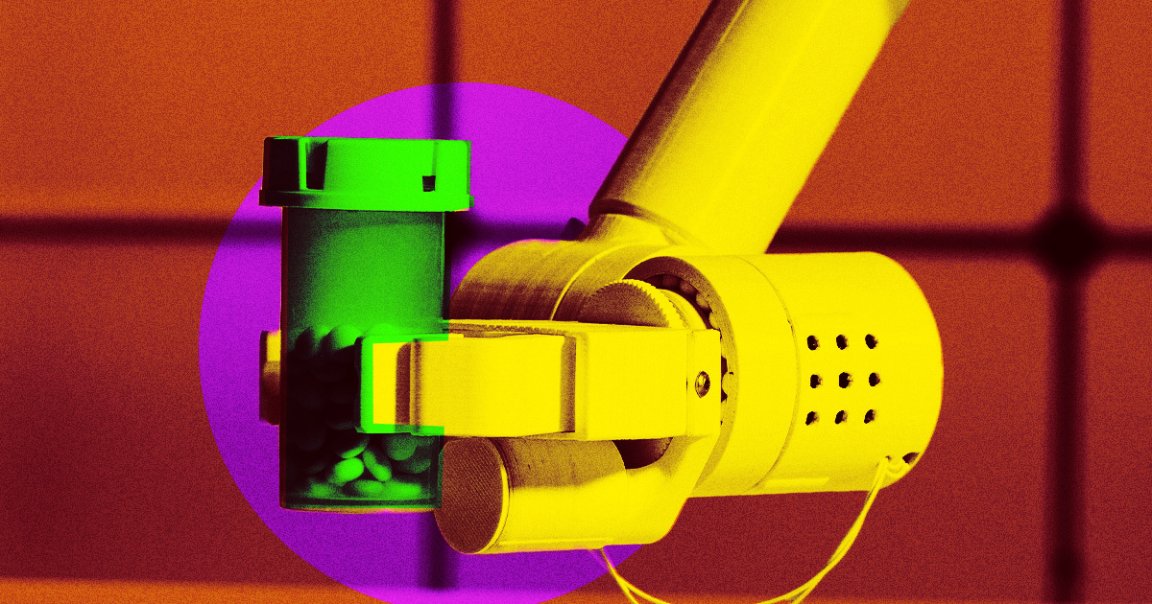

If you weren’t convinced we’re spiraling toward an actual cyberpunk future, a new bill seeking to let AI prescribe controlled drugs just might.

The proposed law was introduced in the House of Representatives this month by Arizona’s David Schweikert and referred to the House Committee on Energy and Commerce for review. Its purpose: to “amend the Federal Food, Drug, and Cosmetic Act to clarify that artificial intelligence and machine learning technologies can qualify as a practitioner eligible to prescribe drugs.”

In theory, it sounds good. Engaging with the American healthcare system often feels like hitting yourself with a slow-motion brick, so the prospect of a perfect AI-powered medical practitioner that could empathically advise on symptoms, promote a healthy lifestyle, and dispense crucial medication sounds like a promising alternative.

But in practice, today’s AI isn’t anywhere near where it’d need to be to provide any of that, never mind prescribing potentially dangerous drugs, and it’s not clear that it’ll ever get there.

Schweikert’s bill doesn’t quite declare a free-for-all. It stipulates that these robodoctors could only be deployed “if authorized by the State involved and approved, cleared, or authorized by the Food and Drug Administration.” But down the road, AI medicine is clearly the goal. Our lawmakers evidently feel the time (and money) is right to remove the brakes and start letting AI into the healthcare system.

The Congressman’s optimism aside, AI has already fumbled in healthcare repeatedly: there was the time an OpenAI-powered medical record tool was caught fabricating patients’ medical histories, the time a Microsoft diagnostic tool confidently asserted that the average hospital was haunted by numerous ghosts, and the time an eating disorder helpline’s AI chatbot went off the rails and started encouraging users to engage in disordered eating.

Researchers share these concerns. “Existing evaluations are insufficient to understand clinical utility and risks because LLMs [large language models] might unexpectedly alter clinical decision making,” reads a critical study from the medical journal The Lancet, adding that “physicians might use LLMs’ assessments instead of using LLM responses to facilitate the communication of their own assessments.”

There’s also a social concern: today’s AI is notoriously easy to exploit, meaning patients would inevitably try to trick AI doctors into prescribing addictive drugs, and would likely succeed, with no accountability or oversight.

For what it’s worth, Schweikert used to sound more measured. In a blurb from July of last year, the Congressman is quoted saying that the “next step is understanding how this type of technology fits ‘into everything from building medical records, tracking you, helping you manage any pharmaceuticals you use for your heart issues, even down to producing datasets for your cardiologist to remotely look at your data.’”

He seems to have moved on from that cautious optimism, instead adopting the move-fast-and-break-things grindset that spits untested self-driving cars onto our roads and AI Hitlerbots into our feeds, all without our consent, of course.

As the race to profitability in AI heats up, the demand for real-world use cases is growing. And as it does, tech companies face immense pressure to pump out the next iteration, the next big boom.

But the consequences of corner-cutting in the medical world are steep, and big tech has shown time and again that it would rather rush its products to market and shunt social responsibility onto us — filling our schools with ahistorical Anne Frank bots and AI buddies that drive teens toward suicide and self-harm.

Deregulation of the kind Schweikert proposes is exactly how big tech gets away with offenses like training GenAI models on patient records without consent. It does nothing to ensure that subject-matter experts are involved at any step in the process, or that we put the common good before the corporate good.

And as our lawmakers hand these tech firms the keys to the kingdom, it’s often the most vulnerable who are harmed first — recall the bombshell revelation that the biggest and flashiest AI models are built on the backs of sweatshop workers.

When it comes to AI outpatient care, you don’t need to be Cory Doctorow to imagine a world of stratified healthcare (well, more stratified than it already is) where the wealthiest among us have access to real, human doctors, and the rest of us are left with the unpredictable AI equivalent.

And in the era of Donald Trump’s full embrace of AI, it’s not hard to imagine another executive order or federal partnership making AI pharmacists a reality without that pesky oversight.
