"AI-enabled attacks are probably round the corner."

Bark Bark Bark

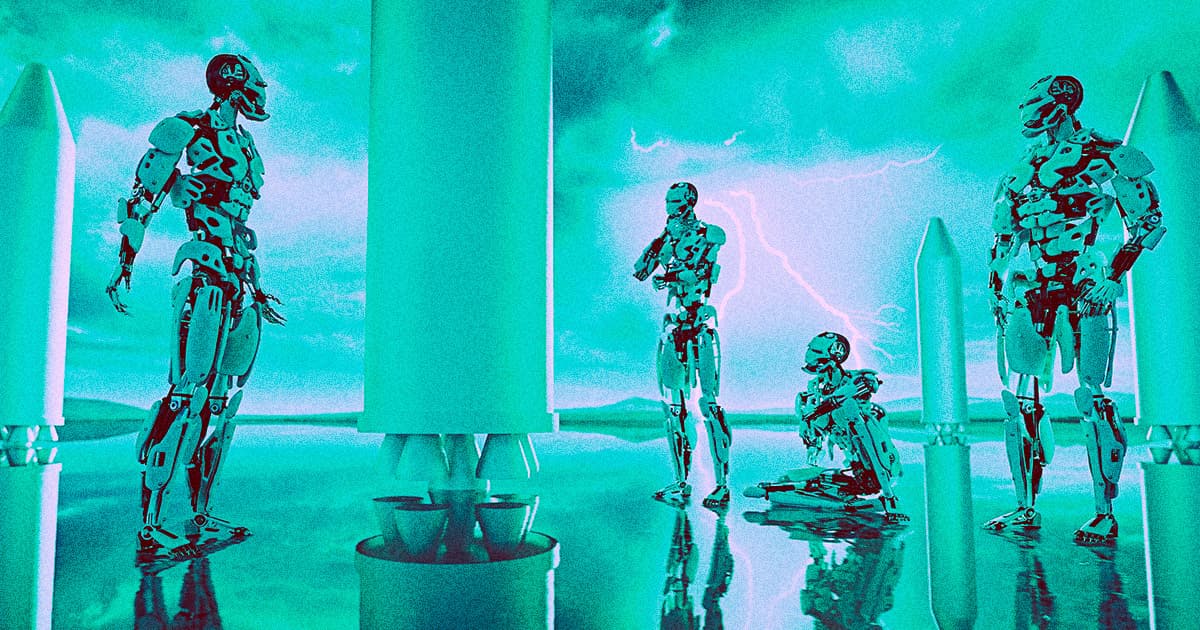

One of the UK's terrorism watchdogs is warning that human society may soon start to witness full-blown AI-assisted, or even AI-propagated, terrorism.

"I believe it is entirely conceivable that AI chatbots will be programmed — or, even worse, decide — to propagate violent extremist ideology," Jonathan Hall, who has served as the UK parliament's Independent Reviewer of Terrorism Legislation since 2019, told the Daily Mail, ominously warning that "AI-enabled attacks are probably round the corner."

Extremists Wanted

Global terrorism experts have been discussing AI for some time now, although much of that discussion has been about using AI tools like facial recognition and other data-collection methods to prevent terrorism.

In recent months, however, that discussion has started to shift, as the growing public availability of increasingly powerful AI-powered technologies, such as deepfake tech and generative text and image AI systems, has stoked new concern regarding disinformation and terrorism.

Hall, for his part, makes a compelling case for those growing concerns. He argues that tools powered by large language models (LLMs), like ChatGPT, which are designed to sound eloquent, confident, and convincing regardless of what they're arguing for, could give terrorists a cheap and effective way to sow chaos through AI-generated propaganda and disinformation. They could even be used to recruit new extremists, including those who might be acting alone and are seeking validation and community online. And while that might sound pretty out-there, a chatbot has already allegedly played a role in causing a disaffected user to take his own life; how far away are we, really, from a chatbot validating a user's wish to harm others?

"Terrorism follows life," Hall told the Mail. "When we move online as a society, terrorism moves online."

Early Birds

Hall also made the point that terrorists tend to be "early tech adopters," citing terrorist groups' "misuse of 3D-printed guns and cryptocurrency" as recent examples of bad actors using burgeoning tools to cause chaos and harm.

Moreover, the lawyer says that because the norms and laws regarding AI are currently so vague (and, frankly, relatively nonexistent), AI terrorism may be particularly difficult to both track and prosecute. And that's already true now; what happens when, theoretically, a rogue AI escapes its guardrails and starts acting out on its own? It may never happen, but it's not outside the realm of possibility.

"The criminal law does not extend to robots, the AI groomer will go scot-free," Hall told the outlet, adding that the law often fails to "operate reliably when the responsibility is shared between man and machine."

So, you know. Happy Monday.

