In the public consciousness, OpenAI is the obvious winner of the meteoric AI boom. For a runner-up, you might consider Midjourney or Anthropic's Claude, a high-performing competitor to ChatGPT.

Whether any of those players will figure out how to effectively monetize that buzz is widely debated. But in the meantime, someone has to supply the hardware to run all that viral generative AI — and for now, that's where the money is.

Enter the Nvidia Corporation, a newly trillion-dollar company that's making so much dough off its gangbusters AI chips that its revenue has more than doubled from last year. It has quickly become the undisputed backbone of the AI industry.

Per its latest quarterly earnings report, Nvidia's revenue now sits at a hefty $13.5 billion, and the spike in its profits is even more unbelievable: a ninefold increase in net income year-over-year, shooting up to $6.2 billion.

In other words, there's no question that AI is a gold rush — and regardless of whether any of the prospectors hit pay dirt, it's currently Nvidia that's selling shovels.

That's a surprising twist. If you've heard of Nvidia in years past, it was more than likely for its gaming hardware. Now, selling parts to PC gamers accounts for only a modest portion of its colossal revenue.

That reality has been quietly shifting for a while. Before AI took the tech sector by storm over the past year, the first hint of Nvidia's strange new trajectory was arguably cryptocurrency. As Bitcoin and its ilk swelled in value over the past decade or so, aficionados quickly discovered that the company's graphics processing units (GPUs), long adept at summoning virtual worlds in a home computer, were powerful engines to mine crypto.

Predictably, they started to open huge server farms that devoured electricity, filtered it through GPUs, and churned out digital assets, sometimes tearing apart communities and leaving environmental destruction in their wake. Nvidia's leadership seemed unimpressed by the phenomenon, with the company's chief technology officer Michael Kagan saying earlier this year that the tech "doesn't bring anything useful for society."

But "AI does," he told The Guardian. "With ChatGPT, everybody can now create his own machine, his own program: you just tell it what to do, and it will."

So far, time is bearing out that thesis, at least financially. This quarter, Nvidia's AI hardware division took in a record $10.3 billion in revenue — over three quarters of its total sales, vastly outperforming crypto or gaming.

The company's success has arguably been a long time coming. Either through good luck or good planning, Nvidia got a healthy head start in AI hardware over its competition.

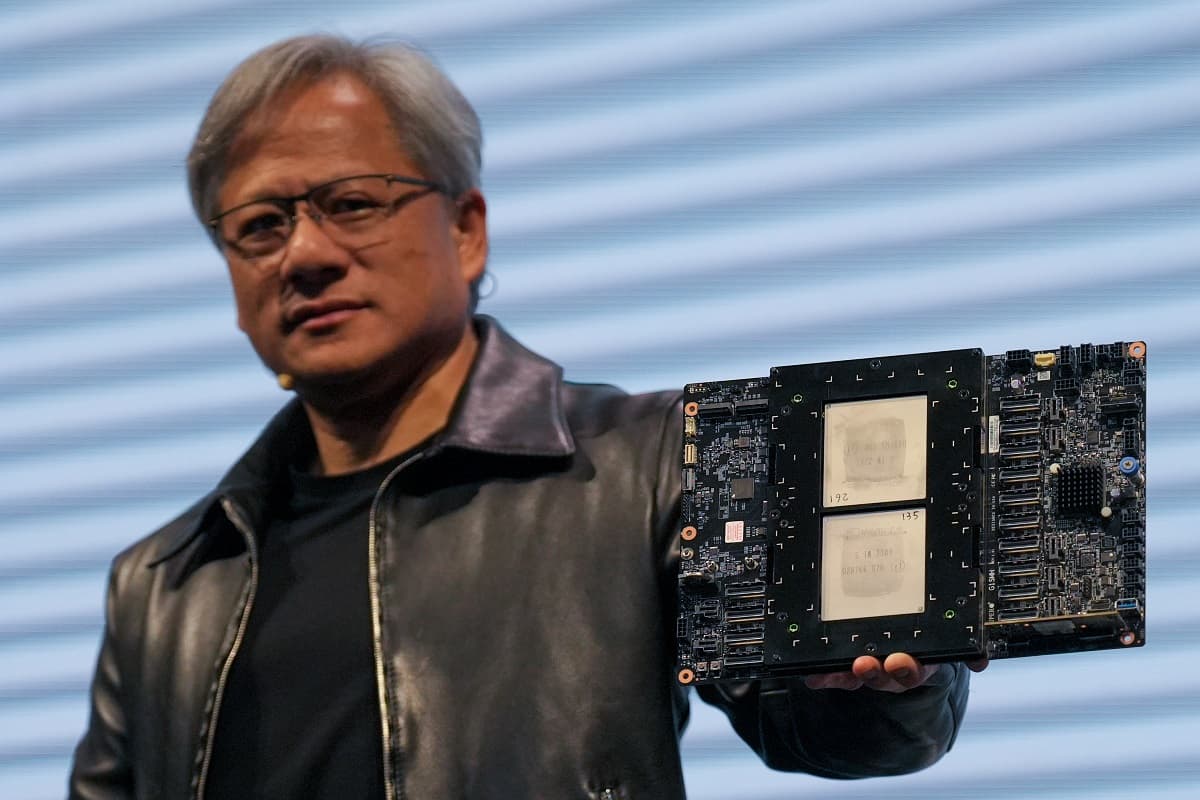

"We had the good wisdom to go put the whole company behind it," Nvidia CEO Jen-Hsun Huang said in an interview with CNBC in March. "We saw early on, about a decade or so ago, that this way of doing software could change everything," he added. "Every chip that we made was focused on artificial intelligence."

In 2006, while still a juggernaut of gaming hardware, Nvidia released CUDA, a parallel computing platform that allowed power-hungry AI models to run on Nvidia GPUs far faster than on those of its competition. Suddenly, developing AI became a lot cheaper, and a lot faster.

It took more than 15 years for the results generated by AI to catch up, but when they did, there was no arguing with them.

Today, Nvidia's flagship AI product is the H100 GPU, and pretty much everyone with their fingers in the generative AI pie wants to get their hands on as many as possible. And if not the H100, currently in short supply, then its predecessor, the A100.

Notable customers include Microsoft, which reportedly spent several hundred million dollars buying thousands of A100 chips for OpenAI as part of its $1 billion partnership with the then-startup in 2019. It was because of that investment, and Nvidia's hardware, that OpenAI was able to build ChatGPT. In fact, the hype has become so profound that entire countries are snapping up the chips: the Financial Times reported earlier this month that Saudi Arabia had bought 3,000 H100s, selling for up to $40,000 apiece, with the United Arab Emirates vacuuming up thousands more.

And so is everyone else. According to one estimate, Nvidia has now cornered up to 95 percent of the AI GPU market.

Needless to say, many are vying for Nvidia's throne. Other computer hardware heavyweights like AMD and Intel are currently dumping billions of dollars into developing their own machine learning processors. So are Google and Amazon. Even Microsoft, in the hopes of weaning itself off the GPU giant's pricey hardware, is reportedly creating an in-house AI chip.

Perhaps Nvidia's competitors see an opening in its lagging output. The H100, which only shipped last September, is still expected to be sold out through 2024, and to satiate the AI industry's voracious appetite, Nvidia reportedly plans to triple its production going forward.

At any rate, Nvidia's absolute bonanza of a year shows few signs of stopping in the near future. By its own projections, it's set to steamroll into the next quarter with a steep climb in revenue to $16 billion. And that's striking: for all the head-spinning hype around AI's capabilities, the source of the go-to muscle behind the technology has remained unchanged.
