“Deep learning” is an artificial intelligence technology that makes facial recognition possible, among many other things, and it has had a tremendous effect on social media. For example, Facebook can detect faces in the photos that you upload. This may not seem all that revolutionary, but it is handy and saves time.

But now, Microsoft researchers are planning to take “deep learning” to a whole new level.

How it all started

In 2012, artificial intelligence researchers from the University of Toronto won a competition called ImageNet, in which programs compete at specific, predefined tasks such as identifying the objects in photos. Researcher Alex Krizhevsky and professor Geoff Hinton used deep neural nets to build an image recognition system that was remarkably accurate.

Their system learned to identify objects by examining a large number of images and picking out patterns, rather than by following rules written by humans. It was no surprise that they topped the contest.

In the end, the Toronto researchers provided a roadmap that giant companies such as Facebook, Twitter, and Google followed. “We can’t claim that our system ‘sees’ like a person does,” says Peter Lee, the head of research at Microsoft. “But what we can say is that for very specific, narrowly defined tasks, we can learn to be as good as humans.”

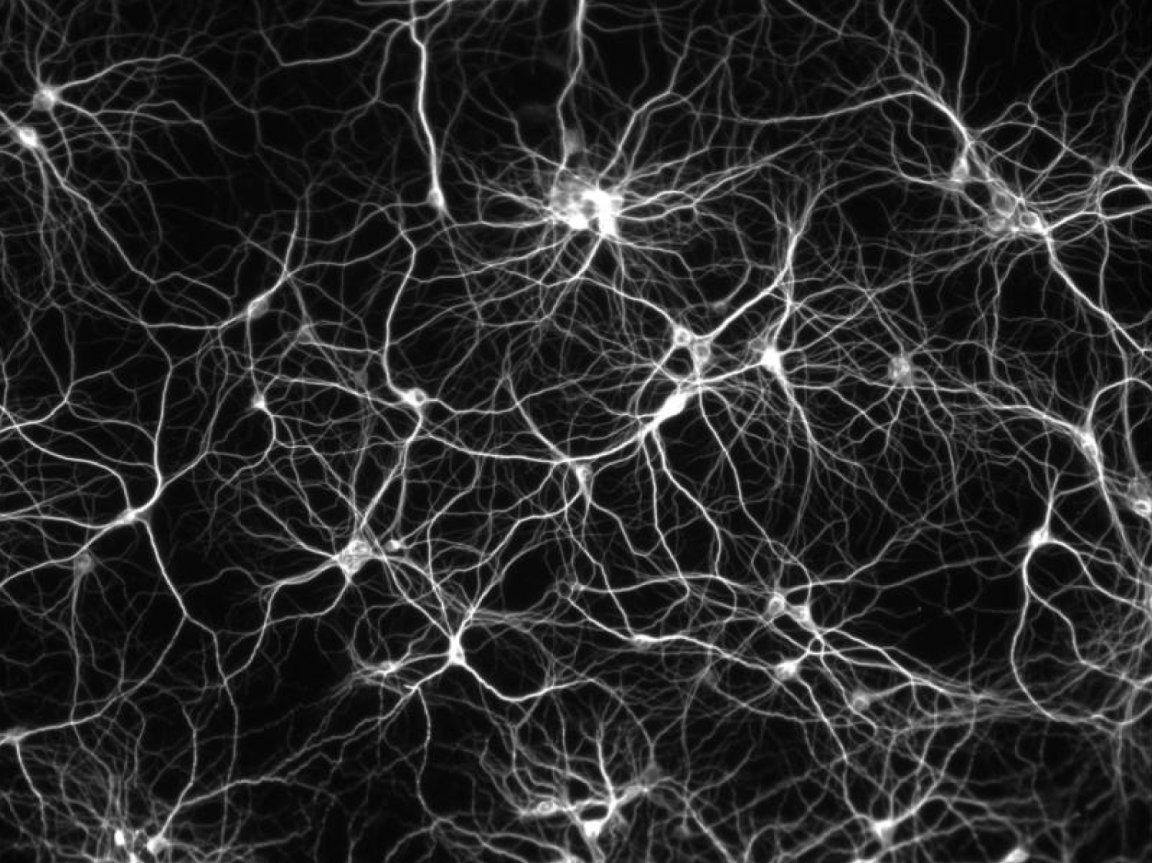

Neural nets use hardware and software to approximate the web of neurons in the human brain. The idea has been around since the 1980s, but it was not until around 2012 that such networks started to work well at large scale.

Krizhevsky and Hinton further developed the technology by running their neural nets on GPUs (graphics processing units), chips originally designed for games and other graphics-heavy software. The chips also turned out to be well suited to the math behind neural nets, which is exactly the kind of computing power that big companies such as Microsoft and Facebook were looking for.

Both Krizhevsky and Hinton have joined Google.

A new approach to deep learning

Microsoft researchers took ImageNet’s winning technology and developed it further into what they call a “deep residual network.” They’ve designed a neural net that is significantly more complex than typical designs: one that spans 152 layers of mathematical operations, compared to the typical six or seven.

Deep neural networks consist of several layers, each performing its own set of mathematical operations. The output of one layer becomes the input of the next. In image recognition, for example, early layers might pick out simple features such as edges, while later layers combine them into more complex shapes and objects. “Generally speaking, if you make these networks deeper, it becomes easier for them to learn,” says Alex Berg, a researcher at the University of North Carolina who helps oversee the ImageNet competition.
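To make the layer-to-layer flow concrete, here is a minimal pure-Python sketch of a tiny fully connected network. The weights are made-up toy values rather than learned ones, and real image-recognition networks use far larger (usually convolutional) layers; the point is only that each layer's output is fed in as the next layer's input.

```python
from typing import List, Tuple

Vector = List[float]
Layer = Tuple[List[Vector], Vector]  # (one weight row per output, one bias per output)


def relu(v: Vector) -> Vector:
    """Zero out negative values, a common nonlinearity applied after each layer."""
    return [max(0.0, x) for x in v]


def dense_layer(inputs: Vector, weights: List[Vector], biases: Vector) -> Vector:
    """One fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return relu([sum(w * x for w, x in zip(row, inputs)) + b
                 for row, b in zip(weights, biases)])


def forward(inputs: Vector, layers: List[Layer]) -> Vector:
    """Pass the input through each layer in turn; each output becomes the next input."""
    activation = inputs
    for weights, biases in layers:
        activation = dense_layer(activation, weights, biases)
    return activation


if __name__ == "__main__":
    # Hypothetical two-layer network: 2 inputs -> 2 hidden values -> 1 output.
    toy_layers = [
        ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
        ([[1.0, 1.0]], [0.0]),
    ]
    print(forward([2.0, 1.0], toy_layers))  # [2.5]
```

Making the network “deeper” in this sketch simply means appending more entries to the list of layers.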

As mentioned above, networks normally have six or seven layers, though some reach as many as 30. So jumping to 152 is a huge leap.

In the past, very deep networks were difficult to train because the signal weakened as it passed through layer after layer. Microsoft’s design gets around this by letting the signal skip over some layers. “When you do this kind of skipping, you’re able to preserve the strength of the signal much further,” says Peter Lee, “and this is turning out to have a tremendous, beneficial impact on accuracy.”
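The skipping idea can be illustrated with a deliberately simplified scalar model. It assumes a hypothetical layer that passes on only 1% of its input (`weight=0.01`). This is not Microsoft’s actual 152-layer design, whose blocks contain convolutional layers, but it shows the core residual rule: each block adds its own input back to its output, so the output is x + F(x) rather than just F(x).

```python
def weak_layer(x: float, weight: float = 0.01) -> float:
    """Hypothetical layer that passes on only a small fraction of its input."""
    return weight * x


def plain_stack(x: float, depth: int) -> float:
    """Send the signal through `depth` layers in sequence, with no shortcuts."""
    for _ in range(depth):
        x = weak_layer(x)
    return x


def residual_stack(x: float, depth: int) -> float:
    """Same layers, but each block skips its input ahead and adds it back: x + F(x)."""
    for _ in range(depth):
        x = x + weak_layer(x)
    return x


if __name__ == "__main__":
    print(plain_stack(1.0, 152))     # on the order of 1e-304: the signal has all but vanished
    print(residual_stack(1.0, 152))  # roughly 4.5: still the same order of magnitude as the input
```

In a real residual network, the same shortcut also gives the training signal a direct path backward through the layers, which is part of why such deep designs become trainable at all.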

Another obstacle to building larger networks is the tedious work of choosing the right arrangement of operations for each layer. Microsoft researcher Jian Sun explains that researchers can identify a promising arrangement for a massive neural network, and then the system can cycle through a range of similar possibilities until it settles on the best one. “In most cases, after a number of tries, the researchers learn [something], reflect, and make a new decision on the next try,” he says. “You can view this as ‘human-assisted search.’”
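Jian Sun’s description resembles a simple local search. The sketch below is a hypothetical illustration, not Microsoft’s procedure: a configuration is a dictionary of made-up settings (`blocks`, `width`), and `toy_score` stands in for what would really be the expensive step of training each candidate network and measuring its accuracy. Starting from a researcher-chosen arrangement, the loop tries nearby variations and keeps the best one until no variation improves.

```python
from typing import Callable, Dict, List

Config = Dict[str, int]  # hypothetical settings, e.g. number of blocks and layer width


def neighbors(config: Config) -> List[Config]:
    """All variations that change one setting up or down by one step (staying positive)."""
    result = []
    for key, value in config.items():
        for delta in (-1, 1):
            if value + delta > 0:
                candidate = dict(config)
                candidate[key] = value + delta
                result.append(candidate)
    return result


def local_search(start: Config, score: Callable[[Config], float],
                 max_steps: int = 100) -> Config:
    """Greedy hill climbing: move to the best-scoring neighbor until none improves."""
    best, best_score = start, score(start)
    for _ in range(max_steps):
        candidates = neighbors(best)
        if not candidates:
            break
        top = max(candidates, key=score)
        top_score = score(top)
        if top_score <= best_score:
            break  # no nearby variation beats the current arrangement
        best, best_score = top, top_score
    return best


def toy_score(config: Config) -> float:
    """Made-up stand-in for accuracy; it peaks at 8 blocks with width 4."""
    return -((config["blocks"] - 8) ** 2) - ((config["width"] - 4) ** 2)


if __name__ == "__main__":
    print(local_search({"blocks": 3, "width": 2}, toy_score))  # {'blocks': 8, 'width': 4}
```

Greedy search like this can stall at a local optimum, which is one reason the researchers’ own judgment between tries (the “human” in “human-assisted search”) still matters.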

Looking ahead, some expect that networks this deep may eventually recognize human speech and understand language as we naturally speak it.