Moral Dilemma

According to one study, nearly 60% of people are willing to ride in a self-driving car, but that may be because we have yet to grasp what it really means to put our lives, and the lives of others, in the hands of an autonomous vehicle.

Think about it. You’re in a self-driving car, cruising down the highway, when something goes wrong. Suddenly, you're in a life-or-death situation. Should the car save you or the people crossing the street? Should a self-driving car slam into a wall to save women, children, and the elderly? What if it's to save a couple of criminals instead?

These are the questions asked by MIT’s “Moral Machine.”

To give people a glimpse of the scenarios that could become reality once self-driving technology goes mainstream, the university created a 13-scenario exercise. In it, users weigh the life-and-death ethical decisions that self-driving cars could face and consider whether machines should be making these decisions at all.

Analytics Behind Ethics

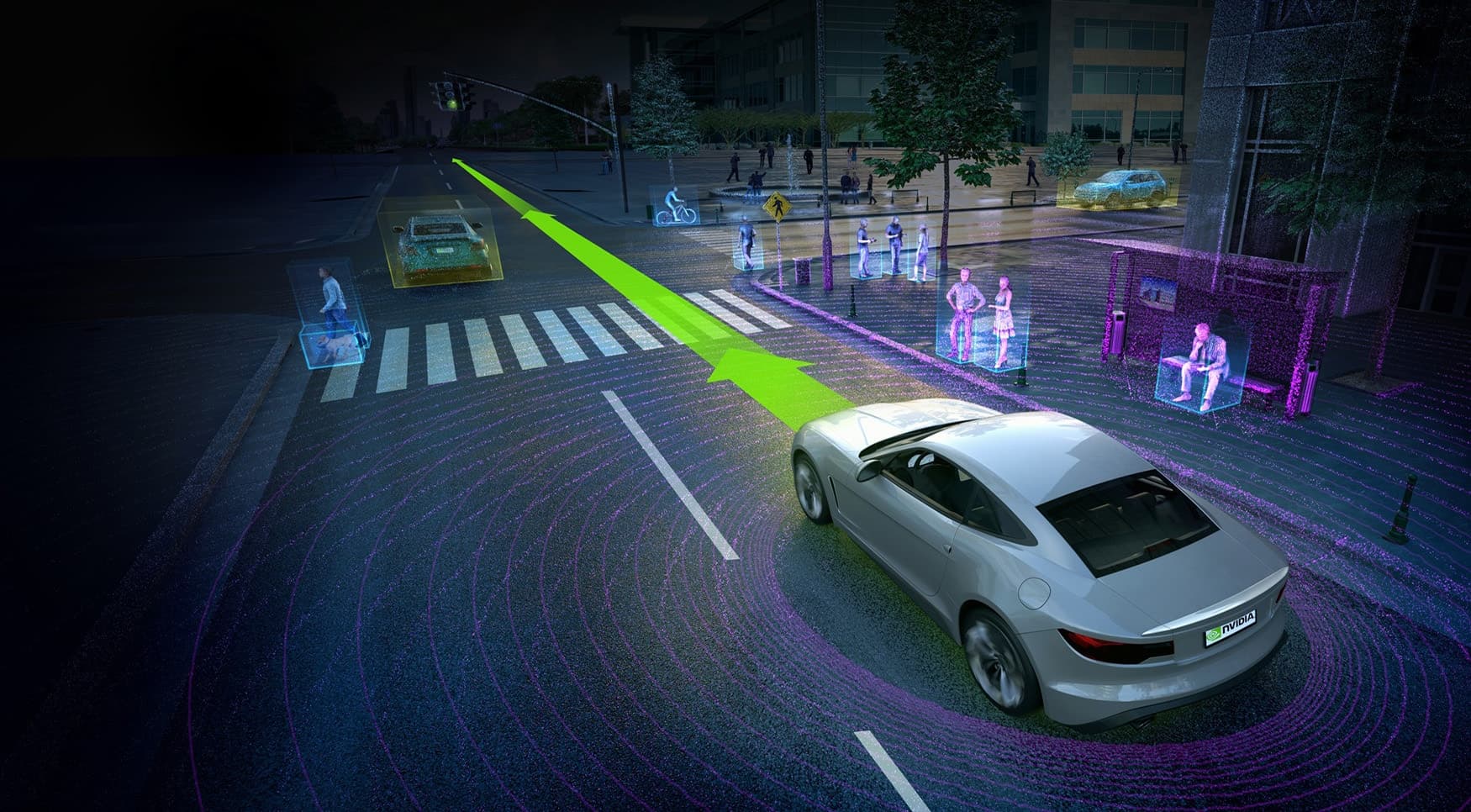

The nuances behind decisions like these have yet to be built into today's AI systems, including those powering autonomous cars. In a potentially fatal situation, should a self-driving car take action if doing so saves more lives? Or should it hold its course and let fate take over?

Ultimately, self-driving technology needs a way for AI to make the difficult ethical choices humans make every day. One way to get there is to study the patterns behind our moral decisions and apply those patterns to AI systems.

Given that engineers are already starting to code this complex kind of decision-making into self-driving technology, there's no better time than now to ponder these hypothetical scenarios. Through projects like MIT's "Moral Machine," we can remain, metaphorically, in control of our cars long after we hand the driver's seat over to AI.
