Mind Control

One reason humans fear robots, and autonomous technologies in general, is the potential for them to spiral out of our control (perhaps taking over the world while they're at it). Since no one yet knows how a technological singularity would actually play out, robots taking over the world remains science fiction for now.

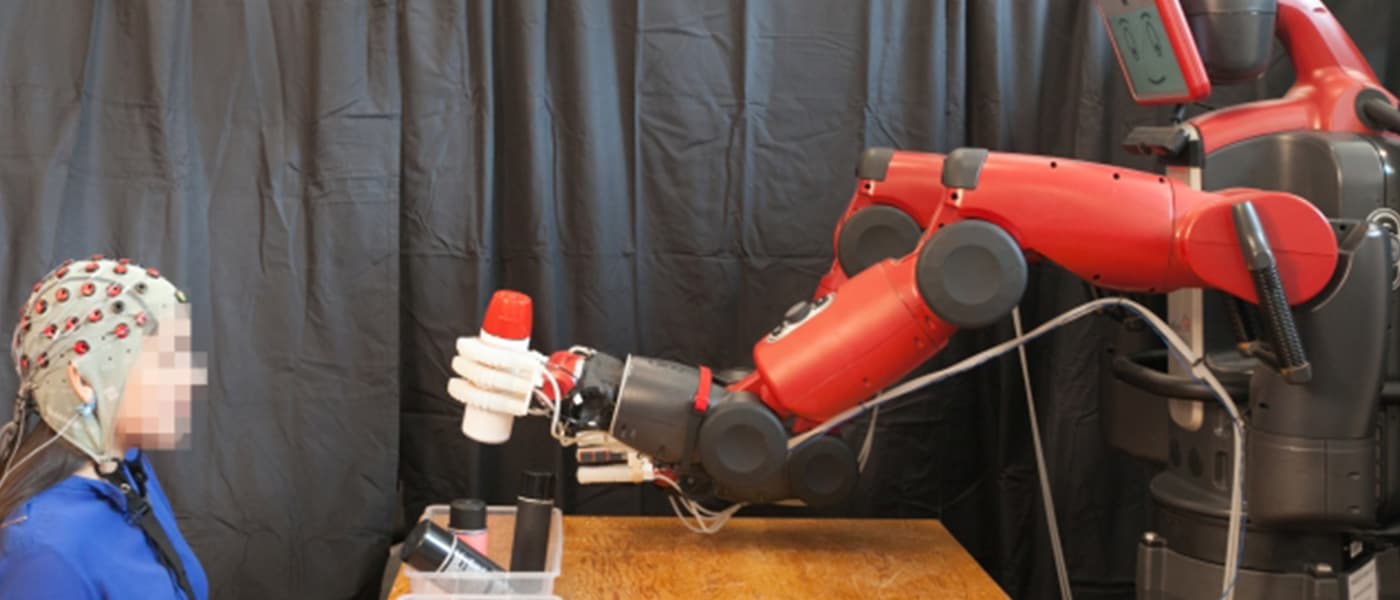

For those who worry a lot about machines taking over the world, here's something that might offer some reassurance. Researchers at the Massachusetts Institute of Technology's (MIT) Computer Science and Artificial Intelligence Laboratory (CSAIL), together with researchers from Boston University, are developing a way to command robots using brain signals. Essentially, this lets people control robots using only their minds.

Researchers created a feedback system that allows robots to correct their mistakes easily. This system uses brain activity data from an electroencephalography (EEG) monitor, scanning for brain signals called "error-related potentials" (ErrPs). ErrPs are generated whenever the brain notices a mistake.

This feedback mechanism works in real time, thanks to the team's machine-learning algorithms, which make the system capable of classifying brain waves in just 10 to 20 milliseconds. “As you watch the robot, all you have to do is mentally agree or disagree with what it is doing,” explained CSAIL director Daniela Rus, senior author of the team's research paper, which has been accepted to the IEEE International Conference on Robotics and Automation (ICRA), taking place in May. “You don’t have to train yourself to think in a certain way — the machine adapts to you, and not the other way around.”

Better Robots

Of course, monitoring ErrPs is just one piece of the puzzle. Fully controlling a robot with the human brain would take more than reading a couple of brain signals. But this research into more intuitive human-robot interaction opens up possible real-world applications wherever a person has to control robots, such as managing robot assembly lines in factories or supervising autonomous driving systems.

“Imagine being able to instantaneously tell a robot to do a certain action, without needing to type a command, push a button or even say a word,” Rus said. “A streamlined approach like that would improve our abilities to supervise factory robots, driverless cars, and other technologies we haven’t even invented yet.”

University of Freiburg computer science professor Wolfram Burgard commented on this technology's potential for "developing effective tools for brain-controlled robots and prostheses." Burgard, who wasn't part of the study, added, "Given how difficult it can be to translate human language into a meaningful signal for robots, work in this area could have a truly profound impact on the future of human-robot collaboration."
