Unveiling New Chips

Google has been revealing more and more about its revolutionary software, including the innovations behind its Search and Street View features. However, it hasn't been nearly as forthcoming about its hardware.

But that just changed.

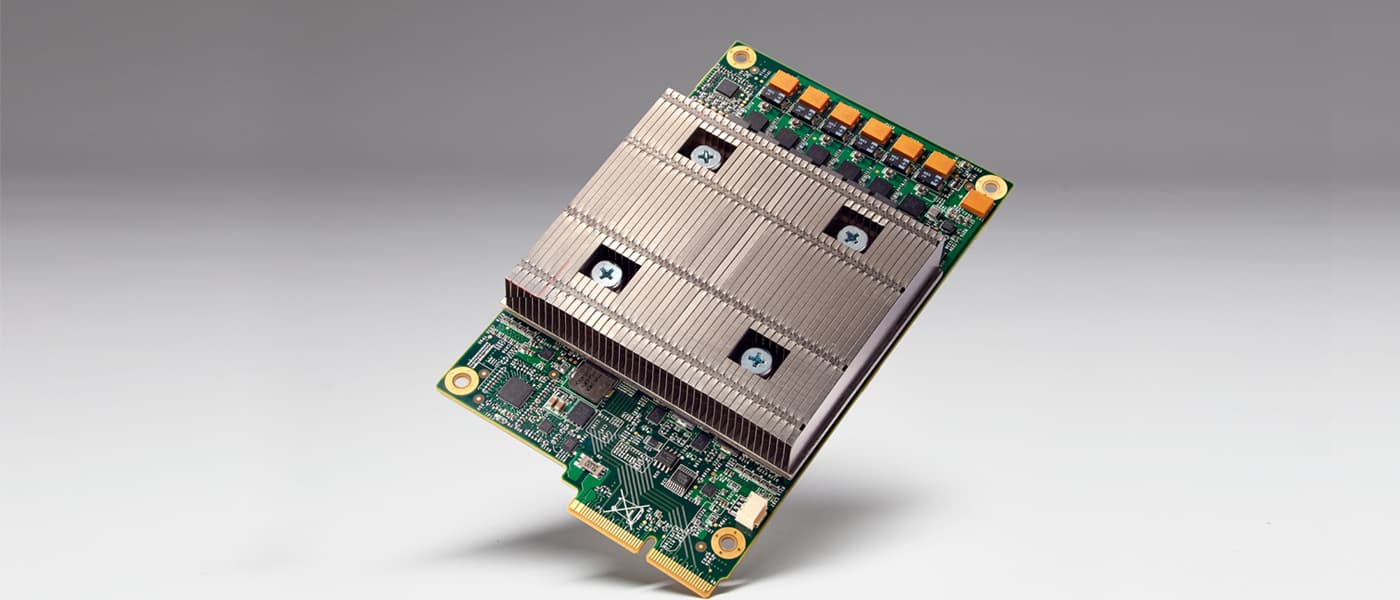

Google just unveiled one of its hardware brainchildren: the Tensor Processing Unit (TPU). The TPU is a custom ASIC (application-specific integrated circuit) built specifically for TensorFlow, Google's open-source software library for machine learning.

According to Google's Cloud Platform Blog,

We’ve been running TPUs inside our data centers for more than a year, and have found them to deliver an order of magnitude better-optimized performance per watt for machine learning. This is roughly equivalent to fast-forwarding technology about seven years into the future (three generations of Moore’s Law).

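As a rough check on the quoted figures, the sketch below converts an "order of magnitude" (10x) gain into Moore's Law doublings. It assumes a doubling period of about two years, a common rule of thumb; that period is an assumption made here, not a number from Google's post.

```python
import math

# Assumption: performance per chip doubles roughly every 2 years
# (a common rule-of-thumb reading of Moore's Law, not Google's figure).
DOUBLING_PERIOD_YEARS = 2.0


def years_equivalent(speedup: float, doubling_period: float = DOUBLING_PERIOD_YEARS) -> float:
    """Years of Moore's Law progress needed to reach a given speedup."""
    doublings = math.log2(speedup)  # how many 2x "generations" the speedup represents
    return doublings * doubling_period


# An "order of magnitude" is a 10x improvement.
gain = 10.0
print(f"doublings: {math.log2(gain):.2f}")
print(f"years:     {years_equivalent(gain):.1f}")
```

Under that assumption, 10x works out to about 3.3 doublings, or roughly 6.6 years, which lines up with Google's "three generations" and "about seven years" framing.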

Because the processor is tailored to machine learning applications, it can tolerate reduced computational precision, which means it needs fewer transistors per operation. As Google explains,

Because of this, we can squeeze more operations per second into the silicon, use more sophisticated and powerful machine learning models and apply these models more quickly, so users get more intelligent results more rapidly. A board with a TPU fits into a hard disk drive slot in our data center racks.
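To make "reduced computational precision" more concrete, here is a minimal sketch of one generic technique, symmetric 8-bit linear quantization: floating-point weights and inputs are mapped to small integers, multiplied with integer arithmetic, and rescaled at the end. Narrow integer multipliers generally need far less silicon than 32-bit floating-point units, which is the intuition behind "fewer transistors per operation." This illustrates the general idea only; the article does not describe the TPU's actual number format, and the function names and sample values below are hypothetical.

```python
def quantize(values, num_bits=8):
    """Hypothetical helper: symmetric linear quantization of floats to signed ints.

    Returns the integer codes and the scale needed to map them back to floats.
    """
    qmax = 2 ** (num_bits - 1) - 1          # 127 for 8 bits
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round each value to the nearest integer step and clamp to [-qmax, qmax].
    codes = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return codes, scale


def quantized_dot(a, b, num_bits=8):
    """Hypothetical helper: dot product on integer codes, rescaled to float."""
    qa, sa = quantize(a, num_bits)
    qb, sb = quantize(b, num_bits)
    acc = sum(x * y for x, y in zip(qa, qb))  # integer-only multiply-accumulate
    return acc * sa * sb                      # one float rescale at the end


# Made-up example values, standing in for model weights and layer inputs.
weights = [0.52, 1.37, -0.08, 2.10, 0.66]
inputs = [1.20, 0.45, -0.90, 0.33, 0.75]

exact = sum(w * x for w, x in zip(weights, inputs))
approx = quantized_dot(weights, inputs)
print(f"full-precision result: {exact:.4f}")
print(f"8-bit result:          {approx:.4f}")
```

For these made-up values the 8-bit result lands within about 0.001 of the full-precision one. Production schemes are more careful (for example, calibrated or per-channel scales), so treat this only as intuition for why machine learning workloads can trade precision for throughput.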

Chip Use

The TPU is already in use at Google, powering RankBrain, the machine learning system used to improve the relevance of Google Search results. Street View also gets a boost from TPUs, which help improve the accuracy and quality of Google's maps and navigation.

AlphaGo was also powered by TPUs in its matches against Go world champion Lee Sedol, enabling it to "think" much faster and look farther ahead between moves.

The end goal of the project is to gain an edge in the machine learning industry and to make the technology available to Google's customers. Building TPUs into the company's infrastructure stack lets developers tap into Google's computing power, with advanced acceleration, through software such as TensorFlow and Cloud Machine Learning.
